Blog-- Thoughts on data analysis, software development and innovation management. Comments are welcome Post 84 The value-added and the value-perceived of an MBA16-Feb-2014I was the other day riding a high-speed train to Madrid when I couldn't help hearing a conversation between two business-men that were sitting next to me (besides, they were speaking rather loudly). They were discussing the use (and value) of an MBA in the business world. That inevitably caught my attention as I've just enrolled in one this year (which takes all my spare time and keeps me from writing here, I apologise for the poor throughput of posts lately). One of them supported how useful it always is to have such a pragmatic view of a business, while the other thought that it is the other way around, that such a tight focus is a double-edged sword, and that it all comes down to the competence of the candidate, sometimes for the better, and the other times for the worse. That left me a little puzzled. I had already asked this question to HakerNews some time ago, and the answers I got were somewhat negative. Then why did I still enrol? I guess the first thing that comes to my mind is my obstinate passion to be learning continuously and improving my skills. I already have a technical education, experience and background that allows me to face technical problems with confidence (I end up solving the problems I already know how to solve, or else I first learn from the literature and then I find a practical solution), but I feel my professional career needs a veneer of business to achieve a more compelling profile. In addition, my commute takes me quite a lot of time and I wanted to profit from my reading time while being on board of the train. Thus, getting an MBA made sense to me. Do I think of ever running my own business? Of course I do, although I also enjoy taking my job at the big company (which I like very much) as my own business, bearing in mind that my employer is my customer, and that I must always deliver a service a little over his expectations. In the end, it pays my bills, it allows me to reinvest in my own education, and I feel fulfilled with my work. However, in order to fully develop this compelling profile I'm seeking, I also reckon it is of utmost importance to take care of my online profile so as to explore new business opportunities and to make commitments to freelance projects on the side. This is great for getting better at what I do. I have long directed my efforts towards this goal, I have gathered positive feedback, and I am determined to follow this path. Before I bring this post to a close, I recently found a piece of evidence that supports my decision to enrol on a MBA in order to polish my professional profile. On this month's edition of Emprendedores mag there's an article that depicts some entrepreneurship ideas, and in addition to the ones directly related with tech consultancy and tech transfer, many of them are related with strategic management, financial management and customer management. It is to note that the three of them are comprised in the syllabus of an MBA. Post 83 Down to the nitty-gritty of graph adjacency implementation02-Sep-2013The core implementation of the adjacency scheme in a graphical abstract data type is a rich source of discussion. Common knowledge, i.e., Stackoverflow knowledge, indicates that a matrix is usually good if the graph in question is dense (squared space complexity is worth it at the advantage of random-access time complexity). Instead, if the graph is sparse, a linked list is more suitable (linear space complexity at the expense of linear-access time complexity). But is this actually a fair tradeoff? Part of these arguments is due to considering all of its elements internally or managing some of them externally. Matrix-based graphs are only fast to access if it is assumed that the storage positions for the nodes are already known. Since computers don't know (nor assume) things per se, they generally have to be instructed step by step. Thus, the matrix-based approach (i.e., the fastest to access) needs some sort of lookup table to keep track of the node identifiers and their associated storage positions (be it maintained internally or externally). Bearing in mind that storing and searching this dictionary introduces some complexity offsets, its impact must be taken into account in any scenario. Moreover, the list-based graph also needs this lookup table to be able to reference all its nodes unless it is asserted that the graph is always connected, so that every node is accessible from a single root node (which not very realistic, especially for directed graphs). Therefore, the choice of implementation does not simply seem to come down to a tradeoff between graph time and space complexities, but to a somewhat more blurry scenario involving the complexities of the auxiliary data structs. Let's dig into this. The goal of conducting this asymptotic analysis of graph adjacency implementation is to validate the following hypothesis: Matrix-based graphs are more effective than list-based graphs for densely connected networks, whereas list-based graphs are more effective than matrix-based graphs for sparsely connected networks. This is what is usually taken for granted, but it only works if the complexities introduced by the auxiliary (and necessary) data structs of the implementation are of a lesser order of magnitude than the default graph complexities. In order to carry out some experimentation, time and space complexities are taken for proxy effectiveness rates. A matrix-based and a list-based approaches are evaluated on some graph properties and functionalities (storage, node adjacency and node neighborhood functions) and on two topologies with different levels of connectivity. The sparse scenario is defined to be a tree and the dense scenario is defined to be a fully meshed network. Considering that V is the set of nodes (aka vertexes) and E is the set of edges, the aforementioned adjacencies are related as follows:

Now the typical complexities of the two implementations of graph adjacency are shown as follows:

Just for the record: without loss of generality, node degrees for a sparse graph are similar to 1, whereas node degrees for a dense graph are similar to the number of nodes |V|. And the complexities of some implementations tackling the dictionary problem:

It is to note that the dictionary implementation of use to relate node identifiers with storage positions introduces a diverse scenario of complexities. The implementation based on a hash table is the least intrusive with the graph complexities as the storage complexity is equivalent (or of lesser order) and it shows a random-access time complexity. Instead, the remaining two approaches do introduce some equivalent changes worsening the performance of the graph functions (the linear search for the double-list with unordered keys and the binary search for the ordered keys).

Conclusion Anyhow, in order to prevent premature optimization, TADTs implements a graphical abstract data type with a dictionary based on a linked list. This is good enough for most real-world scenarios. Post 82 A story about growing better tomatoes with genetic selection and other farming hacks14-Aug-2013This is a seasonal post about my grandfather's story and his relentless efforts to produce better tomatoes during his whole farming-labor activity. His goal is focused on manual genetic selection. My grandfather is a farming hacker, he doesn't know what this means, but I admire what he has always done. He has conducted this selection process over more than forty years, and now I have tomato seeds that develop into the sweetest tomatoes ever. I have now taken his token and continue his magical deed at a smaller scale, though. The whole process begins with sowing some tomato seeds in pots by the end of January, watering them about three times a week (constant humidity is important for germination) while keeping them in a warm place (indoors). By early March all plants should have sticked out of the turf and should keep growing until May, a time when they shall be replanted to a bigger piece of land, preferably a vegetable garden. Flowers should follow, pollination is automatic as the flowers are bisexual, and the red fruits culminate the cycle. At this point, all is set for some human intervention (otherwise nature tends to maximum entropy by growing a little bit of everything). Only the seeds from the best tomatoes (i.e., the ones with adequate size, intense rosy color, well-rounded shape, firm touch, delicate smell, sweet taste, ...) must be released from the pulp to be dried under the sun and to be safely stored for the next season (next year's summer). A good way to do it is by smashing the selected tomatoes, and leaving them in a jar with some water for 8-10 days so that the pulp rots releasing the seeds, which, by density, settle at the bottom of the container. This is an old farmer's advice for having excellent seeds. Then, by repeating this process over many years, the genetic content of selected ones is such that the resulting tomatoes have no equal in the market.

The only setback of this variety of tomatoes, which we call "pink-colored tomatoes" (see picture), is that the plants do not produce as many tomatoes (or pounds of tomatoes) as other hybridized varieties like bodar, for example. This is why the pink ones are rarely seen on the shelves of the grocer's. Nonetheless, many farmers I know do still cultivate some of them for personal delight in addition to the rest of the varieties for trading. However, the commercial viability of my grandfather's forgotten tomatoes is yet unknown. I can't presently afford to spend much on them so as to iterate them with the consumers, or with other producers (there is a lovely community of farmers on the outskirts of Barcelona that I see every day during my commute). I just grow a couple of plants at the roof of my house to maintain the wonderful seeds that my grandfather cared to select for such as long time. As a research engineer, I will definitely continue the manual selection process of tomato seeds, and measure as many variables as I can to quantitatively model and analyze the process. In the end, as Burrell Smith (designer of the Mac computer) put it at a hacker conference, hacking has to do with careful craftsmanship, and this is not limited to tinkering with high tech. In fact, there are already other personalities in this field with many interesting ideas in this regard. One of them is John Seymour. From his books I learned to revitalize plants with fermented nettle broth, to kill caterpillars with tobacco infusions, and to eliminate greenflies with basil and ladybugs. By applying these natural techniques I contribute to the enchanting and miraculous process of tomato improvement with ecologically-friendly methods free from pernicious chemicals. This is not a matter of fashion, we are what we eat, and fortunately more and more people nowadays adhere to hacking for a better food system beyond growing vegetables. Post 81 Facelifting my homepage while sticking to my long-term research/engineering projects: writing and coding20-Jul-2013The first point of this post is that I'm a little fed up with changing the style and layout of my homepage and I want to set on a clear line. I'm a little dazed and confused with so many tweaks, not to say what this can mean for my readers (I beg your understanding). Nonetheless, I usually despise the critique focuses on style first and content second. It is positive feedback from the latter that provided me some work as a freelance, for example. Anyhow, I need a sort of stationary brand that identifies me with my research engineer role. And lately that I had come up with a catchy title, "researchineering", that is the portmanteau of "research" and "engineering", the two terms that best define my professional career. I first thought this was great, but now I see this is overcomplicated, missing the simplicity goal and straightforwardness that I pursue. Now I just go with my bunny (that was a present drawn on the back cover of Steven Levy's masterpiece) and a most simple design: no more double content column, no search box, and no lousy online resume (I am a curious telecom engineer in Barcelona, I speak English and I code, further details, Linkedin). Sticking to a long-term project is one important determination. And here I am with my 5-years old homepage, right after having renewed the service contract with my provider. I'm ready to keep on sharing my thoughts, my rants... seeking to produce fruitful content that is of interest (at least to me), in addition to hosting online services that serve me well to validate business hypotheses with the real world (I don't want to live in a vacuum), and to improve my skills by iteration. I want to be constantly learning something new (sometimes re-learning for the sake of clarification), tackling challenging projects and reporting my experiments here. There is high correlation between good writing and good learning, because writing entails learning. However, I am not an assiduous writer yet (I still tend to code more that I write), but I'm trying to develop into one. I am deeply content with Google Reader shutting down because this forced me to clean the dust off my old rusty list of feeds (I had long wanted to so that but never found a moment to do it), and now I have a frightening amount of accumulated content that deserves my reading and posterior digestion here. In the end, writing is a creative process to acquire experience. It is a social activity that exposes one's work to others and reveals how to turn good products into great ones. But there is no creativity without facing the fear of failure. However, I guess the first step to overcome this fear is to maintain a publicly accessible blog. Done. But the challenge increases with time to write better and to keep up with the reader's expectations (well, I did mention I love challenging projects, so that's fine). Learning to write (and to speak publicly) is a requisite to become a good software engineer. This takes time and a lot of deliberate practice, just like programming. And to maintain the professional appeal, one must do something that obviously takes a lot of effort and time, showing one can do the job. Hence, there I go. Writing and coding are my reasons to be here. Post 80 Introducing VSMpy: a bare bones implementation of a Vector Space Model classifier in Python27-Jun-2013Following the good advice to publish old personal code projects to GitHub, this post introduces VSMpy. VSMpy is a bare bones Python package implementing a standard Vector Space Model classifier (i.e., binary-valued vectors from a Bag-Of-Words language model compared with the cosine similarity measure) in the context of a ready-to-distribute package. It illustrates:

In the end, this is almost the same as Bob Carpenter's (Alias-i, Inc) pyhi skeletal project distribution with more veneer of Text Classification. Nonetheless, a project like this one may come handy when starting something new from scratch with Python. Python is a wonderful and powerful programming language that's starting to take over mammoths like Matlab for scientific and engineering purposes (e.g., for signal processing), including Natural Language Processing. I use it extensively at work, and many of the suppliers I deal with do it as well. It is a great tool for product development to iterate fast and release often. Paul Graham noted it explicitly in his "Hackers and Painters" book: during the years he worked on Viaweb, he worried about competitors seeking Python programmers, because that sounded like companies where the technical side, at least, was run by real hackers. Post 79 New publication: Condition Based Maintenance On Board16-Jun-2013Rotating mechanical components are critical elements in the rail industry. A healthy condition of these mechanisms is vital to provide a reliable long-term service. In this regard, optimizing the corresponding maintenance operations with predictive technology offers many attractive advantages to operating and maintenance companies, being the security and the LCC (life cycle cost) improvement (economic savings) some of the most important criteria. To this end, this paper describes the ongoing research and development works for Prognosis and Health Management at ALSTOM Transport, named Condition Based Maintenance On Board (CBM OB). The system is coined CBM OB after its purpose to be of flexible and easy use and installation on moving train units. It describes a general-purpose framework, with an emphasis on data processing power. It is based on a Wireless Sensor Network that is able to monitor, diagnose and prognosticate the health condition of different mechanical elements. The architecture of this framework is modular by design in order to accommodate data processing modules fitting specific needs, adapted to the peculiarities of the problem under analysis and to the environmental conditions of the data acquisition. Empirical experimentation shows that CBM OB provides a detailed analysis that is equivalent to other commercial solutions even with stronger hardware equipment. This paper is to appear on September in the Chemical Engineering Transactions journal (vol. 33, 2013), and the reported CBM OB system is to be presented at the 2013 Prognostics and System Health Management Conference. Post 78 Gaining control over the tools: goodbye Google Reader25-May-2013Gaining control is one of the most important traits to acquire with one's career capital (Newport, 2012). Having a say in what one does and how one does it entails having the liberty to do so, and relying solely on Google Reader to be up to date with the posted news is too much of a risk to accept. Thus, I truly celebrate its decision to shut it down. That made me realize how much dependent on its service I was. Now I run Tiny Tiny RSS on my server and I feel I'm a lot wealthier than I was before (citing Paul Graham by the way) just because of my increased control over my tool. Since I mainly read about professionally-related topics like software development, machine learning and business management, this is to be taken seriously. Hosting one's own web services does cost some money, indeed. Nothing is for free, but freedom is priceless. There is no such thing as a "free web service" anyway. Users always pay with their trust and their personal information, which is then sold to advertising companies, like Google! Because Google is an advertising company, right? If Google can't deliver (ergo stuff) personalized ads in your browsing experience, then it must change its strategy. And it is determined to rule the computing world through Chrome. Thus, its products must be able to so, and Reader did not seem to do very good at this. Don't get me wrong, I believe Google provides wonderful service products developed by brilliant professionals, it just happens that I don't want to feel myself constrained by its business objectives. Therefore I decide to provide me my own tool to gain control and autonomy. Will we be seeing more actions like this one in the next months to come? Note in passing that Google Code has just deprecated download service for project hosting.

-- Post 77 Lean Startup hackers were already there back in the early eighties02-May-2013The fancy "lean" adjective that accompanies every rocking tech business issue nowadays is an already old story. I found it out the other day while skimming through Steven Levy's groundbreaking book "Hackers: Heroes of the Computer Revolution".

Many hackers of the Homebrew Computer Club (HCC) followed these lean principles as a means to avoid building things that no one really wanted or needed:

Considering that all these business approaches were raised during the recessionary period of the early eighties, and that they served the American economy very well, perhaps they should still be regarded of utmost importance nowadays. Post 76 NLP-Tools broadens its capabilities with a RESTful API service18-Apr-2013In the software tool development business, the API is the new language of the developers, i.e., the customers. In this regard, nlpTools keeps pace with the evolution of the industry market and introduces its RESTful API service to facilitate its integration. And in that quest for added-value and kaizen it partners with Mashape to handle the commercialisation issues. The original website still maintains the evaluation service, but further performance features now need to be routed through the Mashape nlpTools endpoint.

In the dark jungle of validated learning through product iteration nlpTools relies on the five keys that make a great API:

APIs may have nonetheless some caveats that could threaten the success of a project built around them, but most of them boil down to not having a paid option entailing a high quality of service. However, nlpTools does consider this commercial option and may thus scale up to the needs required by the developers by contracting more powerful hosting features. The added-value of the service (which is also its core business) lies in its customisation, that is its ability to adapt to the particularities of the developer's problem, such as the fitting to the specific salient characteristics that represent their data. Post 75 Foraging ants as living particle filters24-Feb-2013Ant colonies are admirable examples of cooperative societies. Some of its members are prepared to build their complex lairs, some others constitute an army to protect their population, some others explore the outer world and gather food, etc. With respect to the latter function, which to me is the most representative of ant colony behaviour, I coded a simple simulation in JavaScript inspired by the js1k competition (demo and code available here).

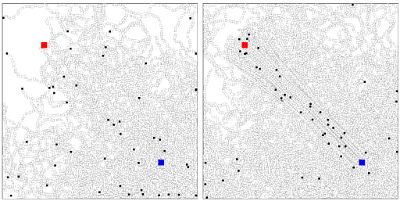

The ants in the app have been implemented following a state machine. Initially, they forage for food, drawing a random walk while they operate in this searching-state. Once they find a source of nurture, the ants transit to another state where they return home, leaving a pheromone trail behind for others to follow. Finally, they end up in a loop going back and forth collecting more food. And as time goes by, more and more ants flock to the food-fetching loop. Therefore, they get the job done more rapidly and minimise the danger of an outer menace. In a sense, foraging ants remind me of a particle filter where the particles are living beings moving stochastically to reach some objective. Thus, their behaviour could be cast as a biologically-inspired search algorithm for an optimisation procedure, considering that the objective is a cost function to be minimised. older - RSS - Search |

||||||||||||||||||||||||||||||

All contents © Alexandre Trilla 2008-2025 |