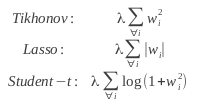

Blog

Thoughts on data analysis, software development and innovation management. Comments are welcome.

Post 73

A New Year's resolution: get over specialisation and embrace generalisation to face real world industry problems

01-Jan-2013

Regularisation is a recurrent issue in Machine Learning (and so it is in this blog, see this post). Prof. Hinton also borrowed the concept in his neural-network view of the world, and used a shocking term like "unlearning" to refer to it. Interesting as it sounds, to achieve greater effectiveness one must not learn the idiosyncrasies of the data; one must remain a little ignorant in order to discover the true behaviour of the data. In this post, I revisit typical weight penalties like Tikhonov (L-2 norm), Lasso (L-1 norm) and Student-t (sum of logs of squared weights), which function as model regularisers:

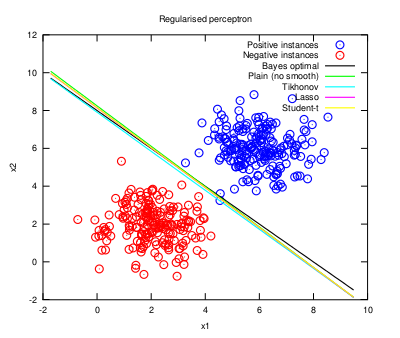
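For readers who prefer code to formulas, the three penalties can be sketched in a few lines of Python. This is only an illustration and not the code linked from this post: the function names are made up, and the Student-t penalty is assumed to take the common log(1 + w²) form, since the logarithm of a bare squared weight would diverge at zero.

```python
import math


def l2_penalty(weights):
    """Tikhonov (L-2 norm) penalty: sum of squared weights."""
    return sum(w * w for w in weights)


def l1_penalty(weights):
    """Lasso (L-1 norm) penalty: sum of absolute weights."""
    return sum(abs(w) for w in weights)


def student_t_penalty(weights):
    """Student-t penalty, ASSUMED here to be sum(log(1 + w^2)).

    It grows quadratically near zero (like L-2) but only
    logarithmically for large weights, so big weights cost less.
    """
    return sum(math.log(1.0 + w * w) for w in weights)


if __name__ == "__main__":
    example = [0.0, 0.5, -2.0]  # hypothetical weight vector
    for penalty in (l2_penalty, l1_penalty, student_t_penalty):
        print(penalty.__name__, penalty(example))
```

Each penalty is added to the training loss, scaled by a regularisation strength, so that large weights are discouraged in a different way by each one.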

And their representation in the feature space is shown as follows (the code is available here; this time I used the Nelder-Mead Simplex algorithm to fit the linear discriminant functions):
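For readers without the linked code at hand, the fitting step could look roughly like the sketch below. It is not the original code: the toy data, the squared-error loss, the regularisation strength and the simplified Nelder-Mead routine (reflection, expansion, inside contraction and shrink only) are all assumptions made for illustration.

```python
import math


def nelder_mead(f, x0, step=0.5, max_iter=2000, tol=1e-10):
    """Simplified Nelder-Mead simplex minimiser (illustrative only)."""
    n = len(x0)
    simplex = [list(x0)]
    for i in range(n):  # initial simplex: x0 plus one step along each axis
        vertex = list(x0)
        vertex[i] += step
        simplex.append(vertex)
    for _ in range(max_iter):
        simplex.sort(key=f)
        best, worst = simplex[0], simplex[-1]
        if abs(f(worst) - f(best)) < tol:
            break
        # Centroid of every vertex except the worst one.
        centroid = [sum(v[i] for v in simplex[:-1]) / n for i in range(n)]
        reflected = [c + (c - w) for c, w in zip(centroid, worst)]
        if f(reflected) < f(best):
            expanded = [c + 2.0 * (c - w) for c, w in zip(centroid, worst)]
            simplex[-1] = expanded if f(expanded) < f(reflected) else reflected
        elif f(reflected) < f(simplex[-2]):
            simplex[-1] = reflected
        else:
            contracted = [c + 0.5 * (w - c) for c, w in zip(centroid, worst)]
            if f(contracted) < f(worst):
                simplex[-1] = contracted
            else:  # shrink every vertex halfway towards the best one
                simplex = [best] + [
                    [b + 0.5 * (x - b) for b, x in zip(best, v)]
                    for v in simplex[1:]
                ]
    return min(simplex, key=f)


# Hypothetical, linearly separable toy data with labels +1 / -1.
POINTS = [(2, 2), (3, 2), (2, 3), (3, 3), (-2, -2), (-3, -2), (-2, -3), (-3, -3)]
LABELS = [1, 1, 1, 1, -1, -1, -1, -1]


def objective(params, lam):
    """Squared error of the discriminant w1*x + w2*y + b, plus lam * L-2 penalty.

    The bias b is left unpenalised (an assumption of this sketch).
    """
    w1, w2, b = params
    loss = sum((w1 * x + w2 * y + b - t) ** 2 for (x, y), t in zip(POINTS, LABELS))
    return loss + lam * (w1 * w1 + w2 * w2)


w_plain = nelder_mead(lambda p: objective(p, 0.0), [0.0, 0.0, 0.0])
w_reg = nelder_mead(lambda p: objective(p, 50.0), [0.0, 0.0, 0.0])
```

Swapping the L-2 term inside `objective` for an L-1 or Student-t penalty only changes one line, which is the appeal of a derivative-free optimiser here: the L-1 penalty is not differentiable at zero, and the simplex method does not need gradients.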

As expected, the regularised models generalise better because they approach the optimal solution, although the differences are small for the problem at hand. Yet more regularisation proposals could still be suggested using model ensembles through bagging, dropout, etc., but are they indeed necessary? Does one really need to bother learning them? The obtained results are more or less the same, anyway.

What is more, not every situation may come down to optimising a model with a fancy smoothing method. For example, you can refer to a discussion about product improvement in Eric Ries' "The Lean Startup" book (page 126, Optimisation Versus Learning), where optimising under great uncertainty can lead to a totally useless product in addition to a big waste of time and effort (as the true objective function, i.e., the success indicator the product needs to become great, is unknown). And still further, not in the startup scenario but in a more established industry like rail transport, David Briginshaw (Editor-in-Chief of the International Railway Journal, October 2012) wrote: "Specialisation leads to people becoming blinkered with a very narrow view of their small field of activity, which is bad for their career development, (...), and can hamper their ability to make good judgements." So, a lack of generalisation (as happens with overfitted models) leads to a useless, skewed vision of the world. Abraham Maslow already put it in different words: if you only have a hammer, you tend to see every problem as a nail.

This reflection inevitably brings into the picture the people who are at the crest of specialisation: the PhDs. Is there any place for them outside the fancy world of academia where they usually dwell and solve imaginary problems? Are they ready to face the real tangible problems (which are not only technical) commonly found in the industry? The world is harder to debug than any snippet of fancy code.
Daniel Lemire has long discussed these aspects and stated that training more PhDs in some targeted areas might fail to improve research output in these areas. Instead, creating new research jobs would be a preferable choice, as it is usually the case that academic papers do not suit many engineering needs and those fancy (reportedly enhanced) methods are thus never adopted by the industry. His articles are worth a read. Research is indeed necessary to solve real world problems, but it must be led by added-value objectives, lest it be of no use at all. Free happy-go-lucky research should not be a choice nowadays (has anyone heard of the financial abyss in academia?).

All contents © Alexandre Trilla 2008-2025